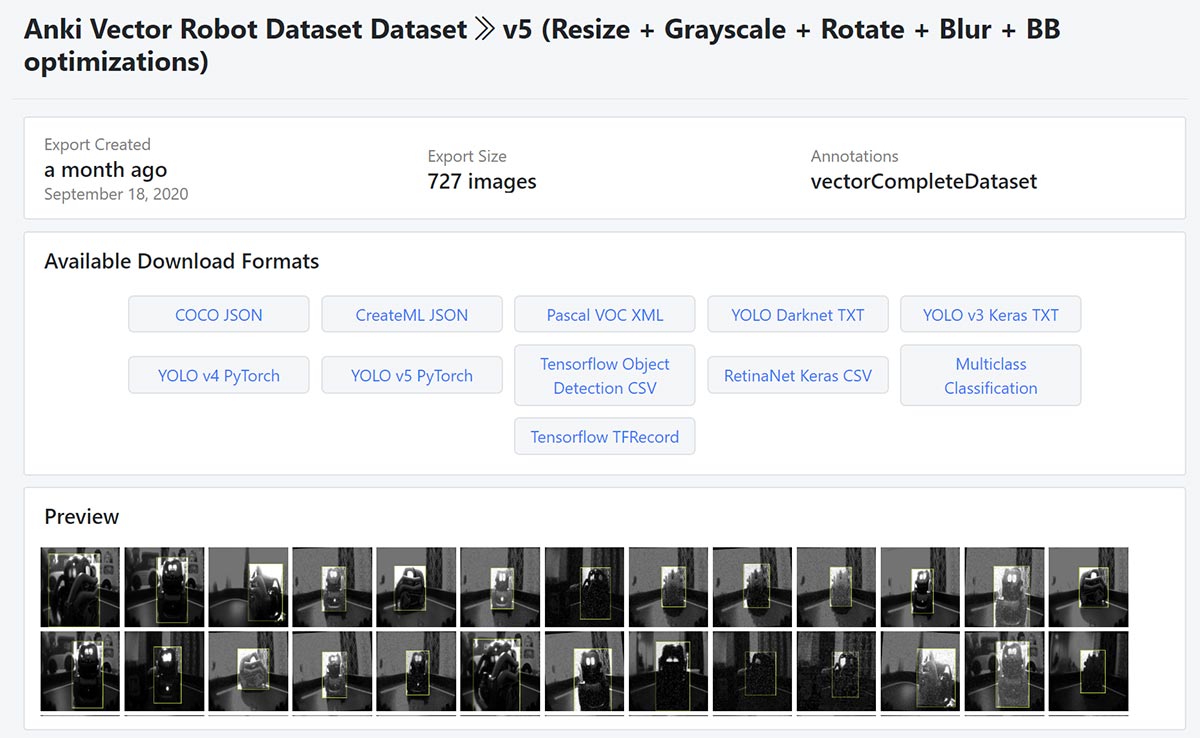

About a month ago, Amitabha Banerjee released a dataset of images on Roboflow.org showing a Vector as seen through the camera of another Vector. This dataset can be used to train a deep learning model to recognize Vector robots. The raw version has 303 images, and the reworked version has 727 processed images.

With the help of this dataset, solutions can be implemented (for example in TensorFlow) that let one Vector recognize another Vector. This will become especially interesting once Escape Pod arrives, as we may then be able to create server-based solutions that do not need to run on the robot itself. The model files produced by training a deep learning neural network are usually far too large to store on the robot, so he needs the help of an external server.
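To make the server-based idea a bit more concrete, here is a minimal sketch using only the Python standard library. It assumes a hypothetical setup in which a client (for example a script that reads Vector's camera through the SDK) POSTs a JPEG frame to a small HTTP server, which runs the recognition step and answers with JSON. The `/detect` path, the `detect_vector()` stub and the helper names are illustrative placeholders, not part of Escape Pod or any Anki/DDL API; a real server would load a trained model (for example a TensorFlow model trained on the dataset above) inside `detect_vector()`.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def detect_vector(jpeg_bytes):
    """Hypothetical placeholder for the model call.

    A real implementation would decode the JPEG and run a trained detector
    on it. This stub only reports how many bytes it received.
    """
    return {"vector_detected": False, "bytes_received": len(jpeg_bytes)}


class DetectHandler(BaseHTTPRequestHandler):
    """Accepts POST /detect with a JPEG body and replies with JSON."""

    def do_POST(self):
        if self.path != "/detect":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        frame = self.rfile.read(length)
        body = json.dumps(detect_vector(frame)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the console quiet


def start_server(host="127.0.0.1", port=0):
    """Run the server in a background thread; port=0 picks a free port."""
    server = ThreadingHTTPServer((host, port), DetectHandler)
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    return server


def send_frame(url, jpeg_bytes, timeout=5.0):
    """Client side: POST one JPEG frame and return the decoded JSON reply."""
    request = urllib.request.Request(
        url,
        data=jpeg_bytes,
        headers={"Content-Type": "image/jpeg"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    server = start_server()
    host, port = server.server_address
    print(send_frame(f"http://{host}:{port}/detect", b"\xff\xd8fake-jpeg"))
    server.shutdown()
    server.server_close()
```

Keeping the model behind a plain HTTP endpoint means the heavy part (loading and running the network) lives on the server, while whatever talks to the robot only needs to ship frames and read back small JSON answers.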

Again, I want to stress that a Raspberry Pi is probably not the ideal platform for an upcoming Escape Pod server, as the computational power of this otherwise great minicomputer is too limited for complex deep learning tasks. I will use a Jetson Nano for experiments instead; it is more expensive (around 140 euros) but far more capable for tasks like this.

I would like to learn how to control Vector.

https://developer.anki.com/vector/docs/initial.html

The SDK is good, but how does it tie into the OSKR? Does Vector run as a compiled Python file on the Linux system?

No, he runs on a Linux system. The Python SDK is only used to communicate with the API endpoints the robot exposes, once you have authenticated him against the SDK with your Anki account.

This is unbelievably cool. He doesn't explain how he uploaded this program, though. Is he just taking Vector's camera feed and processing it after the fact in Google Colab? I'm assuming this has nothing to do with real-time data he could feed back to Vector using the OSKR (though I'm foolishly still hoping it does).

There are multiple ways to get the camera feed, e.g. through one of the SDKs.

This can be used for deep learning apps or for experiments with an external server.
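As one example of the SDK route, the sketch below grabs a few frames from Vector's camera and encodes them as JPEG bytes, ready to be sent to an external server. It assumes the `anki_vector` Python SDK with the 0.6-style camera API (`robot.camera.init_camera_feed()` and `robot.camera.latest_image.raw_image`, a Pillow image) and a robot that has already been authenticated with `python3 -m anki_vector.configure`. Camera attribute names differ between SDK releases, so check the version you have installed; the helper names, frame count and timing values are illustrative only.

```python
import io
import time


def frame_to_jpeg(image, quality=80):
    """Encode a Pillow image (e.g. CameraImage.raw_image) as JPEG bytes."""
    buffer = io.BytesIO()
    image.save(buffer, format="JPEG", quality=quality)
    return buffer.getvalue()


def capture_jpeg_frames(robot, count=5, interval_s=0.5, timeout_s=10.0):
    """Collect `count` JPEG frames from an already running camera feed.

    latest_image can be None until the first frame arrives, so we keep
    polling until enough frames are collected or the timeout expires.
    """
    frames = []
    deadline = time.monotonic() + timeout_s
    while len(frames) < count:
        if time.monotonic() > deadline:
            raise TimeoutError("camera feed did not deliver enough frames")
        latest = robot.camera.latest_image
        if latest is not None:
            frames.append(frame_to_jpeg(latest.raw_image))
        time.sleep(interval_s)
    return frames


def main():
    # Assumes the anki_vector SDK is installed and the robot is configured.
    # Imported here so the helpers above can be reused without a robot.
    import anki_vector

    with anki_vector.Robot() as robot:
        robot.camera.init_camera_feed()
        frames = capture_jpeg_frames(robot, count=5)
    print(f"captured {len(frames)} frames, first is {len(frames[0])} bytes")
```

On a machine with the SDK installed and a configured robot, calling `main()` connects to Vector, starts the camera feed and collects five frames; each entry in `frames` could then be POSTed to an external server for recognition instead of being processed on the robot.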